When should you use blocking or non-blocking API calls? Blocking synchronous communication or non-blocking asynchronous communication? Should commands be fire-and-forget? What’s the big deal with making blocking synchronous calls between services?

Communication

There are three forms of communication that I’m generally thinking of: Commands, Queries, and Events. I’m going to cover all three and the pros and cons of using blocking synchronous or non-blocking asynchronous communication with each of them.

Commands

With commands, it’s all about the user’s or client’s expectations. When the command is performed, do they expect an immediate result, with execution fully complete? Because of this expectation, commands are often blocking synchronous calls.

If the client requests a state change and then performs a query, they expect to read their own write, with no eventual consistency involved. Blocking synchronous calls can make sense here.
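To make "read their write" concrete, here is a minimal sketch. The `accounts` dictionary, `change_email`, and `get_email` are hypothetical names I made up for illustration, and the dictionary stands in for the service's own database.

```python
# Hypothetical in-memory state standing in for the service's database.
accounts = {"alice": "old@example.com"}


def change_email(user, new_email):
    """Blocking command: returns only after the state change is applied."""
    accounts[user] = new_email
    return {"status": "completed"}


def get_email(user):
    """Query against the same state the command just changed."""
    return accounts[user]


result = change_email("alice", "new@example.com")
email_after = get_email("alice")  # the caller reads its own write
```

Because the command returns only once the change is applied, a query made right after it sees the new value.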

Asynchronous commands are useful when execution will take longer than the user is willing to wait. With a blocking call, the client is stuck waiting for a response just to learn whether the command completed. For example, the command might have to fetch and mutate a lot of data in a database, or send thousands of emails, either of which could take a long time.

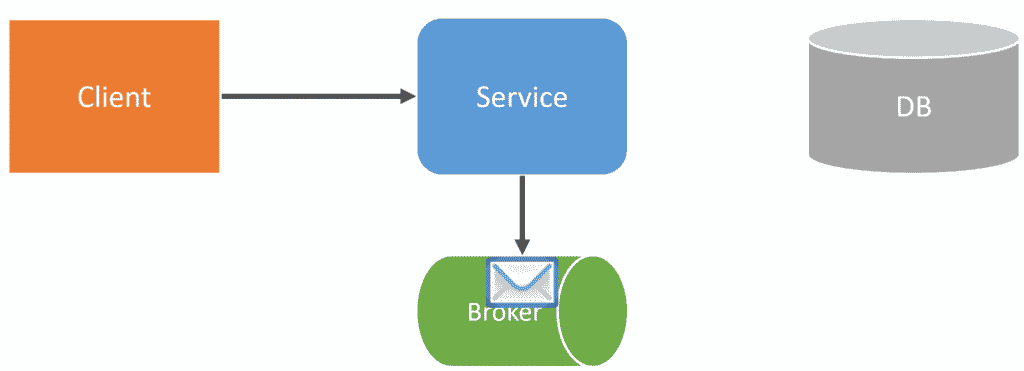

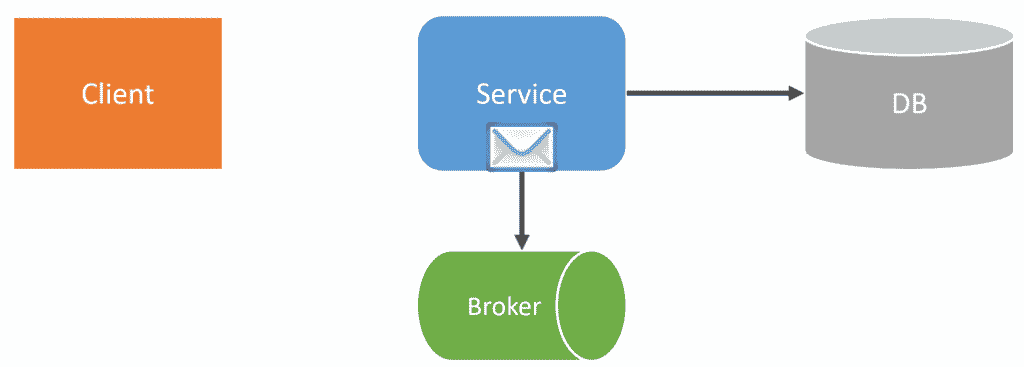

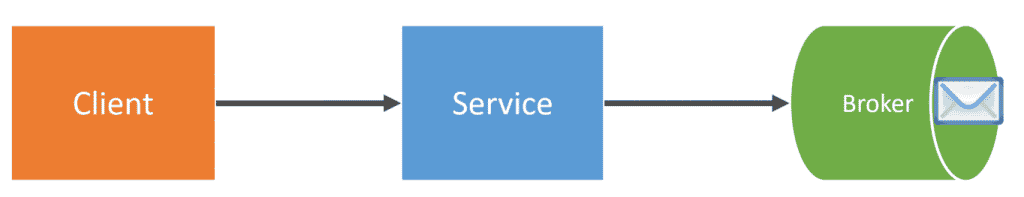

Another situation where async commands are helpful is when you want to capture the command and just tell the user it has been accepted. You can then process the command asynchronously and let the user know, also asynchronously, that it has been completed. An example of this is placing an Order on an e-commerce site. When the user places an order, the site doesn’t immediately create the order and charge their credit card. It simply creates a command that is placed on a queue.

Once the message has been sent to the broker, the client is told the order has been accepted (not created). The work of creating the order and charging the customer’s credit card then happens asynchronously.
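Here is a rough sketch of that accepted-then-processed flow. It is not a real broker setup: an in-process `queue.Queue` stands in for the message broker, a dictionary stands in for the order database, and `place_order` and `process_orders` are hypothetical names.

```python
import queue
import threading
import uuid

command_queue = queue.Queue()  # assumption: stands in for a message broker
orders = {}                    # assumption: stands in for the order database


def place_order(customer_id, items):
    """Capture the command and return right away with 'accepted'."""
    order_id = str(uuid.uuid4())
    command_queue.put(
        {"order_id": order_id, "customer_id": customer_id, "items": items}
    )
    return {"order_id": order_id, "status": "accepted"}


def process_orders():
    """Worker: handles commands outside of the client's request."""
    while True:
        command = command_queue.get()
        # Charging the card and sending the confirmation email would go here.
        orders[command["order_id"]] = {"status": "created", **command}
        command_queue.task_done()


threading.Thread(target=process_orders, daemon=True).start()

response = place_order("customer-1", ["book"])
command_queue.join()  # demo only: wait so the result can be inspected
created = orders[response["order_id"]]
```

The client only ever sees the `accepted` response. The `created` state shows up later, which is why you need some other channel to tell the user the work is done.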

Once the asynchronous work has been completed, typically you’d receive an email letting you know the order has been created. Depending on your context and system, something like WebSockets can also be helpful for pushing notifications to the client when asynchronous work has been completed. Check out my post on Real-Time Web by leveraging Event Driven Architecture.

Queries

Almost all queries are naturally request-response. They will be blocking calls by default because the purpose of a query is to get a result.

This is pretty straightforward when a service is interacting with a database or any of its own infrastructure within a logical boundary, such as a cache.

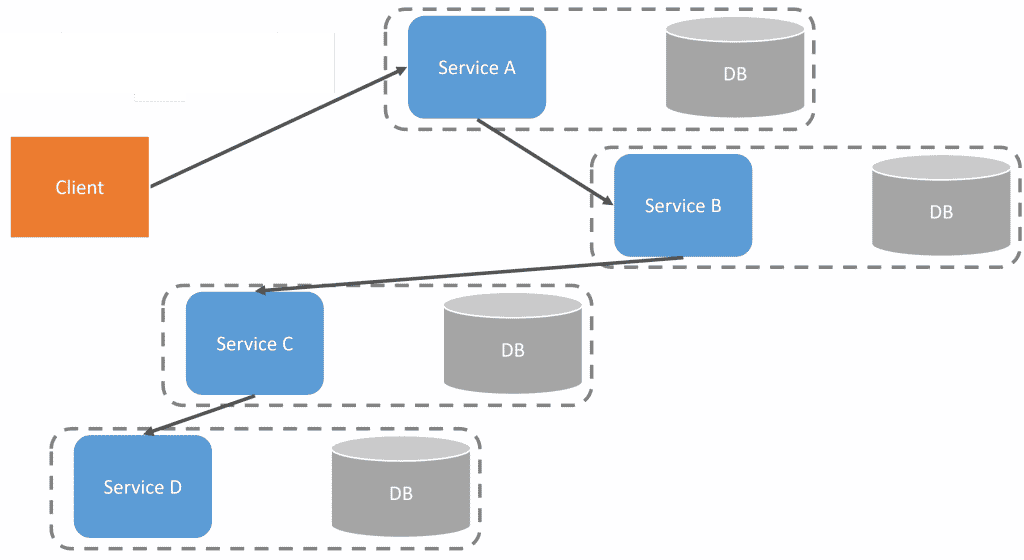

Where this totally falls apart is when you have services that are physically deployed independently and are calling each other using blocking synchronous communication (e.g., HTTP, gRPC).

There are many different issues with service-to-service direct RPC style communication. I cover a bunch of them in my post REST APIs for Microservices? Beware!

One issue is latency. Without something like distributed tracing, you have little visibility into the entire call stack, so you can end up with very slow queries because so many RPC calls are made between services to build up the result.

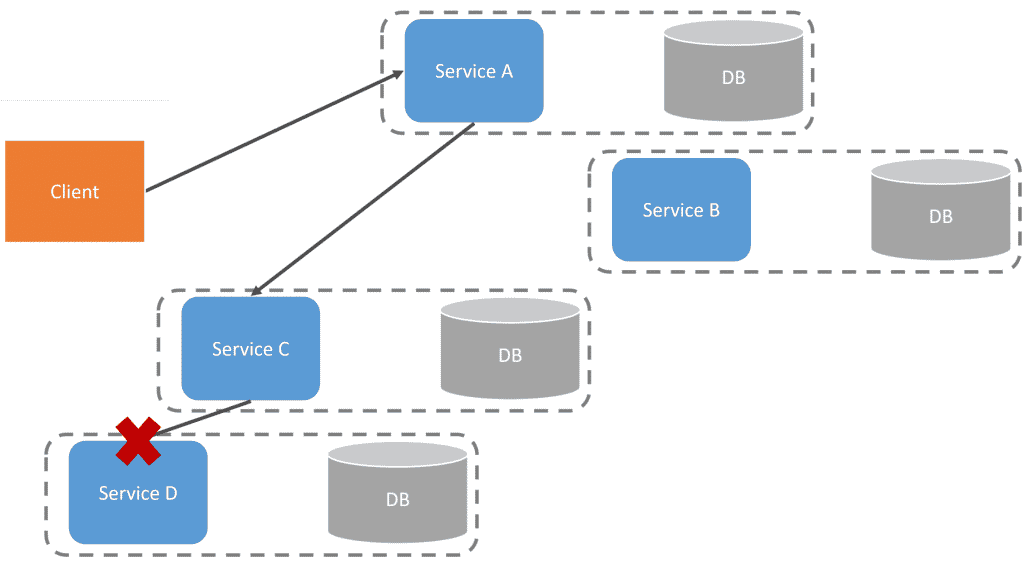

The second issue, and the worst offender, is tight coupling. If a service is failing or unavailable, your entire system could be down if you aren’t handling these failures appropriately. If Service D has availability issues, this affects Service A indirectly, because Service A calls Service C, which requires Service D.

Congratulations, you’ve built a distributed monolith! Or as I like to call it, a distributed turd pile.
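A tiny sketch of how that failure cascades. Plain function calls stand in for blocking HTTP or gRPC calls, and the `service_a`, `service_c`, and `service_d` functions and the `ServiceUnavailable` exception are hypothetical.

```python
class ServiceUnavailable(Exception):
    """Hypothetical error raised when a downstream call fails."""


def service_d():
    # Service D is having availability issues.
    raise ServiceUnavailable("service D is down")


def service_c():
    # Service C cannot build its result without Service D.
    return {"c": "data", "d": service_d()}


def service_a():
    # Service A only calls Service C, yet it inherits Service D's outage.
    return {"a": "data", "c": service_c()}


try:
    result = service_a()
except ServiceUnavailable as error:
    result = f"service A failed: {error}"
```

Service A never calls Service D directly, but it still fails when Service D does.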

So how do you handle queries since they are naturally blocking and synchronous? Well, what you’re trying to do is view composition.

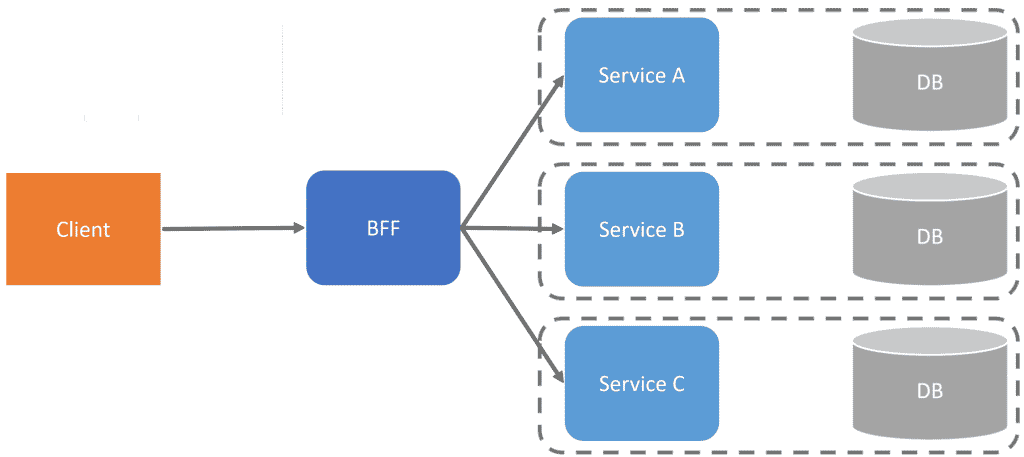

There are different ways to tackle this problem, one of which is to have a gateway or BFF (Backend-for-Frontend) that does the composition, meaning it is responsible for making the calls to all the services and composing the results for the client.

This isn’t the only way to do UI/ViewModel composition. There are other approaches such as moving the logic to the client or having each logical boundary own various UI components. Regardless, each service is independent and does not make calls to other services.

As for availability, if Service C is unavailable, that portion of the ViewModel/UI can be handled explicitly.
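As a sketch of composition in a gateway or BFF, assume three hypothetical services (catalog, pricing, inventory) sit behind the `fetch_*` functions below. In a real system these would be network calls. Here they are plain functions, and the inventory one always fails so the explicit handling is visible.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_catalog(product_id):
    return {"name": "Widget"}


def fetch_pricing(product_id):
    return {"price": 9.99}


def fetch_inventory(product_id):
    raise ConnectionError("inventory service unavailable")


def compose_product_view(product_id):
    """The gateway calls each service itself; services never call each other."""
    sources = {
        "catalog": fetch_catalog,
        "pricing": fetch_pricing,
        "inventory": fetch_inventory,
    }
    view = {}
    with ThreadPoolExecutor() as pool:
        # Calls run concurrently, so they are not stacked one after another.
        futures = {
            name: pool.submit(fetch, product_id)
            for name, fetch in sources.items()
        }
        for name, future in futures.items():
            try:
                view[name] = future.result(timeout=2)
            except Exception:
                # Handle the outage explicitly: this part of the view is empty.
                view[name] = None
    return view


view = compose_product_view("sku-1")
```

The client still gets the catalog and pricing portions, and the UI can decide how to render the missing inventory portion.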

There is one option that I won’t go into in this post, which is asynchronous request-reply. This allows you to leverage a message broker (queues) but still have the client/caller block until it gets a reply message. Check out my post Asynchronous Request-Response Pattern for Non-Blocking Workflows.

Events

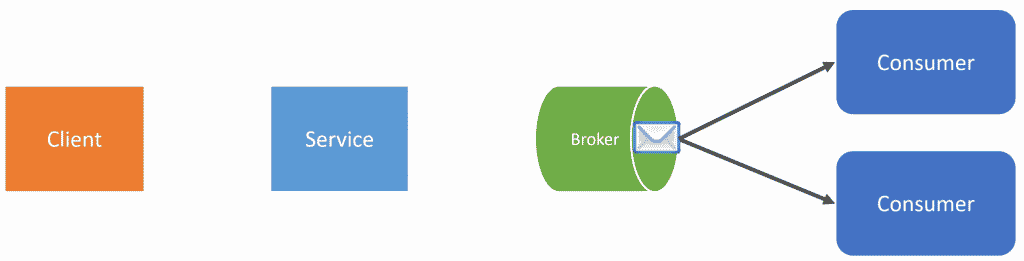

It may seem obvious (or not), but events generally should be processed asynchronously. The biggest reason is isolation.

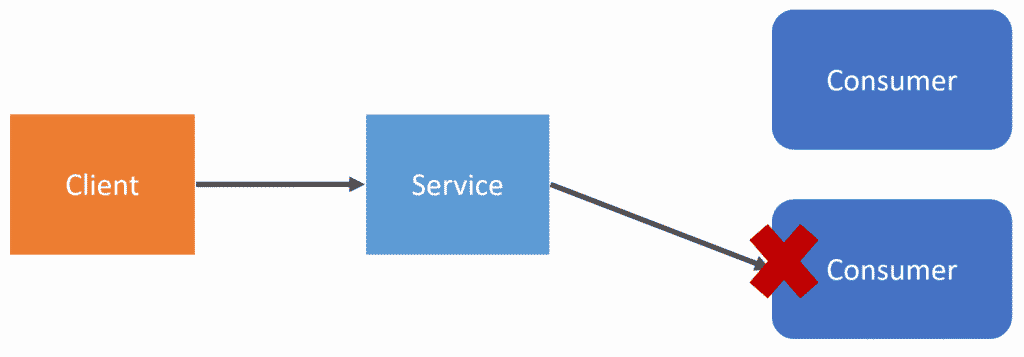

If you publish an event in a blocking synchronous way, the service that calls the consumers when the event occurs has to deal with the consumers’ failures.

If there were two consumers, and the first handled the event correctly but the second failed, the service/caller would need to handle that failure so it doesn’t bubble back up to the client. Also, without any isolation, a consumer that takes a long time to process the event slows down the entire blocking call from the client. The more consumers, the longer the entire call will take from the client. You want each consumer to execute/handle the event in isolation.

The publisher should be unaware of the consumers.

It doesn’t care if there are any consumers or if there are 100. It simply publishes events.
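Here is a minimal in-memory sketch of that isolation, assuming one queue and one worker thread per consumer in place of a real message broker. The `EventBus` class, the two handlers, and the `failures` list are all hypothetical. A real broker would typically give you retries or a dead-letter queue instead.

```python
import queue
import threading

failures = []  # assumption: stands in for retries or a dead-letter queue


class EventBus:
    """In-memory stand-in for a broker: one queue and worker per consumer."""

    def __init__(self):
        self._queues = []

    def subscribe(self, handler):
        consumer_queue = queue.Queue()
        self._queues.append(consumer_queue)

        def run():
            while True:
                event = consumer_queue.get()
                try:
                    handler(event)
                except Exception as error:
                    # The failure stays with this consumer only.
                    failures.append((handler.__name__, str(error)))
                finally:
                    consumer_queue.task_done()

        threading.Thread(target=run, daemon=True).start()

    def publish(self, event):
        # The publisher only enqueues; it does not know who consumes.
        for consumer_queue in self._queues:
            consumer_queue.put(event)

    def drain(self):
        # Demo helper only: wait until every consumer has handled every event.
        for consumer_queue in self._queues:
            consumer_queue.join()


emails_sent = []


def send_confirmation_email(event):
    emails_sent.append(event["order_id"])


def update_warehouse(event):
    raise RuntimeError("warehouse is offline")


bus = EventBus()
bus.subscribe(send_confirmation_email)
bus.subscribe(update_warehouse)
bus.publish({"type": "OrderPlaced", "order_id": "order-1"})
bus.drain()
```

The failing warehouse consumer does not affect the email consumer, and `publish` returns without ever seeing the error.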

Blocking or Non-Blocking API calls?

Hopefully, this illustrated the various ways that blocking synchronous and non-blocking asynchronous communication can be applied to Commands, Queries, and Events.
