
In this section, I’m going to cover how to scale SignalR when it runs in a server farm behind a load balancer.

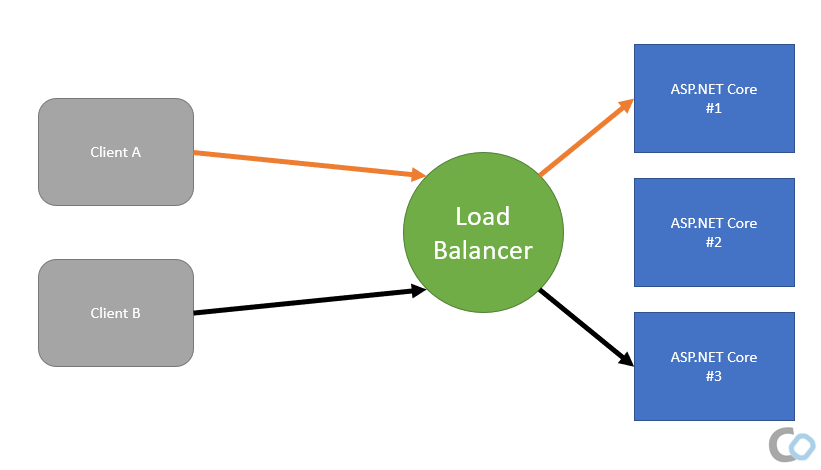

Typically, to scale out, we would introduce a load balancer and additional instances of our application.

Running multiple instances of our application with SignalR behind a load balancer is a problem because each instance only keeps track of the clients connected to it.

This blog post is a part of a course that is a complete step-by-step guide on how to build real-time web applications using ASP.NET Core SignalR. By the end of this course, you’ll be able to build real-world, scalable, production applications using the tools and techniques it provides.

If you haven’t already, check out the prior sections of this course.

SignalR Scaling

The diagram below illustrates 3 instances of our ASP.NET Core application behind a load balancer. When Client A makes a SignalR connection, it is passed off to Instance #1. When Client B connects, it may get passed to Instance #2. Each instance is unaware of the clients connected to the other instances, and there is no communication between them.

This means that if you call Clients.All.SendAsync() from a Hub or HubContext, you will only be sending to the clients connected to that instance.
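To make the limitation concrete, here is a minimal sketch of a hub that broadcasts to all clients. The `ChatHub` class and the `ReceiveMessage` client method name are hypothetical and used only for illustration; they are not taken from the course source code.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.SignalR;

// Hypothetical hub, for illustration only.
public class ChatHub : Hub
{
    // Without a backplane, Clients.All only covers the connections
    // held by the instance that happens to run this method.
    public Task Broadcast(string message)
    {
        // "ReceiveMessage" is an assumed client-side handler name.
        return Clients.All.SendAsync("ReceiveMessage", message);
    }
}
```

If Client A is connected to Instance #1 and invokes `Broadcast`, a Client B connected to Instance #2 would not receive the message.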

Redis

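You can use Redis as a backplane. With a backplane, a message sent from one instance is published through Redis so the other instances can deliver it to their own connected clients.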

If you’re using ASP.NET Core 2.2 or later, the recommended package is Microsoft.AspNetCore.SignalR.StackExchangeRedis, which relies on StackExchange.Redis 2.x.

If you are using ASP.NET Core 2.1, you can use Microsoft.AspNetCore.SignalR.Redis, which relies on StackExchange.Redis 1.x.

Configuring

Depending on which package you are using, you must chain its Redis extension method onto AddSignalR() in your Startup’s ConfigureServices().
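As a rough sketch of that registration, assuming the Microsoft.AspNetCore.SignalR.StackExchangeRedis package and a Redis server reachable at `localhost:6379` (the connection string is an assumption, adjust it for your environment):

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // Assumed connection string: a local Redis on the default port.
        services.AddSignalR()
            .AddStackExchangeRedis("localhost:6379");

        // With Microsoft.AspNetCore.SignalR.Redis (ASP.NET Core 2.1),
        // the equivalent call is .AddRedis("localhost:6379").
    }
}
```

The rest of the Startup class (endpoint or route mapping for your hubs) stays the same; only the SignalR registration changes.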

You can also specify configuration options. In this example, I’m

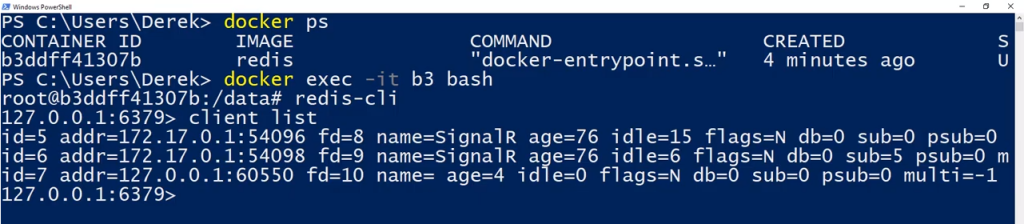
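For reference, a sketch of passing options might look like the following. The channel prefix value `MyApp` and the connection string are assumptions chosen for illustration and are not necessarily what the screenshot shows. A prefix can be useful when several applications share one Redis server.

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddSignalR()
            .AddStackExchangeRedis("localhost:6379", options =>
            {
                // Assumed prefix; isolates this app's pub/sub channels.
                // Newer StackExchange.Redis versions prefer
                // RedisChannel.Literal("MyApp") over the implicit
                // string conversion used here.
                options.Configuration.ChannelPrefix = "MyApp";
            });
    }
}
```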

To confirm my SignalR Hub is now using Redis, I’ll take a look at the connections in the Redis instance I’m hosting in a Docker container.

Sticky Sessions

There is one caveat when using a Redis backplane. If you are using anything other than WebSockets, meaning Server-Sent Events or Long Polling, you must configure your load balancer to support sticky sessions. Sticky sessions are also known as client affinity and can be enabled in both Azure and AWS.
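If you control the clients, one way to stay on the WebSockets path is to restrict the connection to that transport. The sketch below uses the .NET client from the Microsoft.AspNetCore.SignalR.Client package; the `HubConnectionFactory` helper and its `hubUrl` parameter are hypothetical names for illustration.

```csharp
using Microsoft.AspNetCore.Http.Connections;
using Microsoft.AspNetCore.SignalR.Client;

// Hypothetical helper, for illustration only.
public static class HubConnectionFactory
{
    public static HubConnection CreateWebSocketsOnly(string hubUrl)
    {
        return new HubConnectionBuilder()
            .WithUrl(hubUrl, options =>
            {
                // Only allow the WebSockets transport (no SSE or Long Polling).
                options.Transports = HttpTransportType.WebSockets;
                // Skip the HTTP negotiate request and open the WebSocket directly.
                options.SkipNegotiation = true;
            })
            .Build();
    }
}
```

The trade-off is that clients which cannot use WebSockets will fail to connect instead of falling back to another transport, so sticky sessions remain the safer choice when you do not control every client.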

Get The Course!

You’ve got several options:

- Check out my Practical ASP.NET Core SignalR playlist on my CodeOpinion YouTube channel.

- Access the full course now by enrolling for free on Teachable.

- Follow along with the blog post series here on CodeOpinion.com


Source Code

All of the source code for this blog post and this course is available in the Practical.AspNetCore.SignalR repo on GitHub.