
Web-Queue-Worker is an excellent architecture pattern to add to your toolbox. It’s just a pattern, and it can work with a monolith, a modular monolith, microservices, or whatever else. It provides many scaling benefits by moving work into the background, and it’s a good fit if you have long-running jobs, workflows, or even recurring batch jobs.

YouTube

Check out my YouTube channel, where I post all kinds of content accompanying my posts, including this video showing everything in this post.

Flow

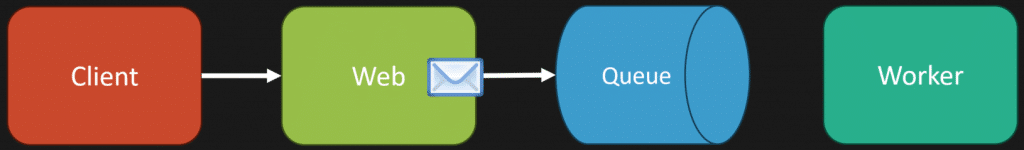

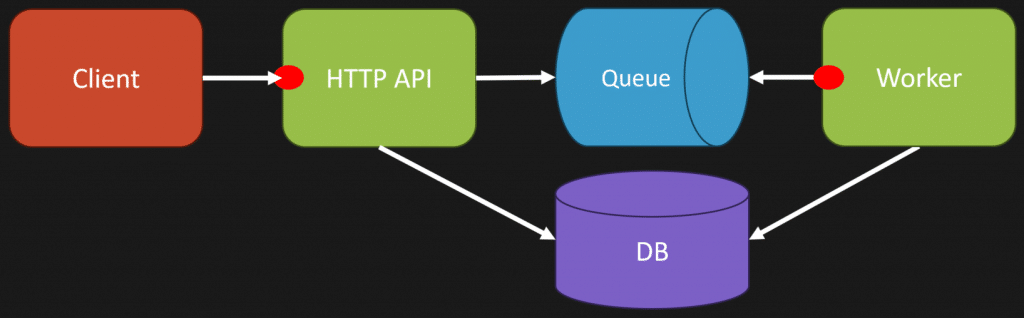

Here’s the flow of the Web-Queue-Worker pattern in its simplest form. A client makes a request to our HTTP API (Web), which generates a message and places it in a queue. The HTTP API could also interact with a database or do whatever other processing it needs, and then it returns a response to the client.

Separately, we have a Worker (this could be another process or thread) that consumes and processes the message from the queue.

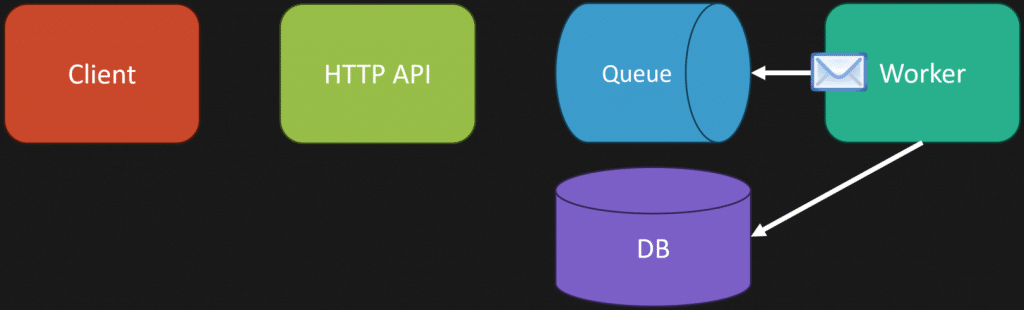

The worker uses the message to understand what work needs to be performed. That work might be an operation that interacts with a database, a third-party service such as email, and so on.
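To make the flow concrete, here’s a minimal in-process sketch. It’s an illustration under stated assumptions, not a production setup: Python’s `queue.Queue` stands in for a real message broker, a thread stands in for the worker process, and the names `handle_http_request`, `worker`, and the `SendOrderConfirmation` message type are hypothetical.

```python
import queue
import threading

# Assumption: an in-process queue stands in for a real broker
# (RabbitMQ, Azure Service Bus, SQS, ...).
work_queue: "queue.Queue" = queue.Queue()
processed: list = []

def handle_http_request(order_id: str) -> dict:
    """Web (hypothetical handler): enqueue a message and return right away."""
    work_queue.put({"type": "SendOrderConfirmation", "order_id": order_id})
    return {"status": 202, "order_id": order_id}  # accepted, not yet processed

def worker() -> None:
    """Worker: consume messages and perform the work each one describes."""
    while True:
        message = work_queue.get()
        if message is None:  # sentinel used to stop the worker in this demo
            work_queue.task_done()
            break
        # The real work (database write, call to an email service, ...) goes here.
        processed.append(message)
        work_queue.task_done()

worker_thread = threading.Thread(target=worker, daemon=True)
worker_thread.start()

response = handle_http_request("order-123")
work_queue.join()     # demo only: wait until the worker has drained the queue
work_queue.put(None)  # stop the worker
worker_thread.join()

print(response)   # {'status': 202, 'order_id': 'order-123'}
print(processed)  # [{'type': 'SendOrderConfirmation', 'order_id': 'order-123'}]
```

The web side never waits for the work itself. It only waits for the message to be enqueued, which is what lets it respond quickly.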

Async Everywhere

The first problem people often encounter when applying this pattern is using it everywhere or in the wrong places. Essentially, the web-queue-worker pattern moves the work, or a portion of it, to run asynchronously.

However, not every use case should be asynchronous. There’s nothing wrong with your HTTP API performing all the required work before returning a response to the client. A simple example is a GET request that purely returns data. Queries are inherently request-response. Commands can also be request-response when the client needs an immediate response.

Using the web-queue-worker pattern means moving work to be processed asynchronously. Not all work should be done asynchronously; use it where appropriate.

One place where it’s beneficial to move work to be processed asynchronously is when the client doesn’t need to know when the work is completed, or doesn’t need to wait for it to complete.

Another is avoiding long-running HTTP requests: requests that would take a significant amount of time to complete if all the work were done before responding to the client.
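A hedged sketch of that distinction: the query does its work inline and returns the data, while the long-running command only enqueues a message and returns `202 Accepted` with a job id. The handler names, the `products` data, and the `GenerateReport` message are hypothetical, and `queue.Queue` again stands in for a real broker.

```python
import queue
import uuid

# Assumption: in-process queue instead of a broker; product data is made up.
jobs: "queue.Queue" = queue.Queue()
products = {"p1": {"id": "p1", "name": "Keyboard", "price": 49.0}}

def get_product(product_id: str) -> tuple:
    """Query: inherently request-response, so do the work inline and return the data."""
    product = products.get(product_id)
    return (200, product) if product else (404, {})

def generate_report(customer_id: str) -> tuple:
    """Long-running command the client doesn't need to wait on: enqueue it and return."""
    job_id = str(uuid.uuid4())
    jobs.put({"type": "GenerateReport", "job_id": job_id, "customer_id": customer_id})
    return 202, {"job_id": job_id}  # the work itself happens later, in a worker
```

Calling `get_product("p1")` returns `(200, {...})` immediately, while `generate_report("c1")` returns `202` with a job id and leaves one message on `jobs` for a worker to pick up.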

Push

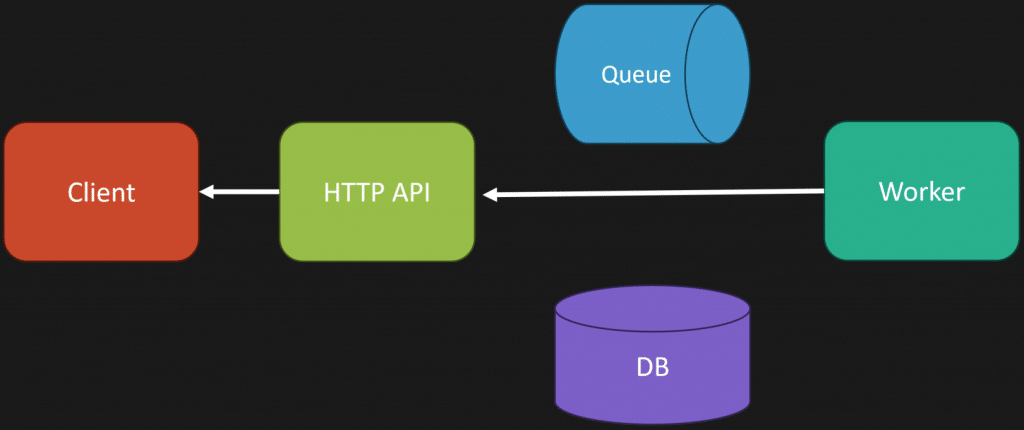

However, if you have work that can be done asynchronously but you also need to tell the client that the work has completed, or what the result was, how do you do that? My post on avoiding long-running HTTP requests goes into more detail, but one option is to use communication mechanisms you already have in your system. One of those might be WebSockets.

In the .NET space, using SignalR with a Redis backplane makes this relatively trivial, even in a scaled-out environment. Any process can send a message to a client over its WebSocket connection, because the message is routed to the HTTP node that holds that connection.
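The routing idea behind a backplane can be sketched in memory. This is not SignalR’s or Redis’s API. It’s a hypothetical stand-in where every web node subscribes to a shared channel and only the node that holds the client’s connection delivers the message. Connections are simulated as lists of delivered payloads.

```python
from typing import Callable

class Backplane:
    """Hypothetical in-memory stand-in for a pub/sub backplane such as Redis."""

    def __init__(self) -> None:
        self._subscribers: list = []

    def subscribe(self, handler: Callable[[str, str], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, client_id: str, payload: str) -> None:
        # Every web node receives the message; each decides whether it owns the client.
        for handler in self._subscribers:
            handler(client_id, payload)

class WebNode:
    """One HTTP node holding some clients' WebSocket connections (simulated)."""

    def __init__(self, name: str, backplane: Backplane) -> None:
        self.name = name
        self.connections: dict = {}  # client_id -> payloads "sent" over the socket
        backplane.subscribe(self.on_backplane_message)

    def connect(self, client_id: str) -> None:
        self.connections[client_id] = []

    def on_backplane_message(self, client_id: str, payload: str) -> None:
        if client_id in self.connections:  # only the owning node delivers
            self.connections[client_id].append(payload)

backplane = Backplane()
node_a = WebNode("web-a", backplane)
node_b = WebNode("web-b", backplane)
node_b.connect("client-42")

# A worker doesn't know which node holds the connection; it just publishes.
backplane.publish("client-42", "Order order-123 processed")
```

After the publish, only `node_b` has delivered the payload to `client-42`; `node_a` ignored it because it holds no connection for that client.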

It doesn’t always need to be overly technical. E-commerce is a great example. When you place an order with your credit card details, the transaction with the payment gateway often doesn’t happen during the request to the HTTP API (Web). Instead, it’s done using the web-queue-worker pattern, where the credit card transaction is processed asynchronously. If the transaction fails, you’ll likely receive an email notifying you of the failure. That’s a simple and effective way to communicate the result of the asynchronous work in that context.
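Here’s a hedged sketch of that e-commerce flow, run in a single process for illustration. `payment_gateway_charge` is a hypothetical stub that declines amounts over 1000, `send_email` only records what would be sent, and the `ChargeCard` message and order statuses are made up.

```python
import queue
from dataclasses import dataclass

@dataclass
class ChargeCard:
    """Hypothetical message asking a worker to charge the customer's card."""
    order_id: str
    customer_email: str
    amount: float

payments: "queue.Queue" = queue.Queue()
orders: dict = {}
sent_emails: list = []

def place_order(order_id: str, email: str, amount: float) -> dict:
    """Web: record the order and enqueue the charge; don't call the gateway here."""
    orders[order_id] = "Pending"
    payments.put(ChargeCard(order_id, email, amount))
    return {"status": 202, "order_id": order_id}

def payment_gateway_charge(amount: float) -> bool:
    """Hypothetical gateway stub: declines anything over 1000."""
    return amount <= 1000

def send_email(to: str, subject: str) -> None:
    sent_emails.append((to, subject))  # stand-in for a real email service

def process_payments() -> None:
    """Worker: charge cards, and email the customer if the charge fails."""
    while not payments.empty():
        msg = payments.get()
        if payment_gateway_charge(msg.amount):
            orders[msg.order_id] = "Paid"
        else:
            orders[msg.order_id] = "PaymentFailed"
            send_email(msg.customer_email, f"Payment for order {msg.order_id} failed")

place_order("o1", "a@example.com", 50.0)
place_order("o2", "b@example.com", 5000.0)
process_payments()
```

The customer who placed `o2` gets the failure email, and the web request that placed the order never waited on the gateway.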

Scaling

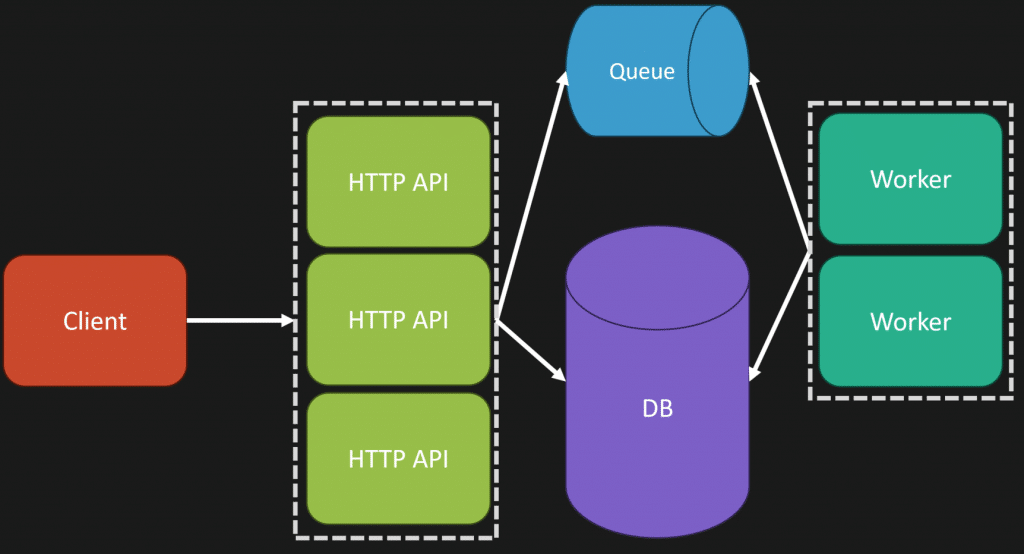

If you’re used to scaling out your web tier, you add more instances of your HTTP API behind a load balancer. The same applies to the workers consuming messages off the queue.

This is called the competing consumers pattern. You can scale out by adding more instances of your workers to perform more work off your queue concurrently, increasing throughput.
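A minimal sketch of competing consumers, assuming an in-process `queue.Queue` and threads in place of a broker and separate worker instances. Each message is delivered to exactly one consumer. Which consumer gets which message varies from run to run.

```python
import queue
import threading
from collections import Counter

# Assumption: threads stand in for separately deployed worker instances.
messages: "queue.Queue" = queue.Queue()
handled_by: dict = {}  # message -> names of consumers that processed it
lock = threading.Lock()

def consumer(name: str) -> None:
    """Each consumer competes with the others for messages on the same queue."""
    while True:
        msg = messages.get()
        if msg is None:  # sentinel: stop this consumer
            break
        with lock:
            handled_by.setdefault(msg, []).append(name)

NUM_WORKERS = 3
NUM_MESSAGES = 100

workers = [threading.Thread(target=consumer, args=(f"worker-{i}",)) for i in range(NUM_WORKERS)]
for w in workers:
    w.start()
for i in range(NUM_MESSAGES):
    messages.put(i)
for _ in workers:
    messages.put(None)  # one sentinel per consumer, queued after all the work
for w in workers:
    w.join()

# How many messages each consumer handled; the split differs between runs.
per_worker = Counter(names[0] for names in handled_by.values())
print(per_worker)
```

Adding a consumer is just starting another thread (or, in production, another worker instance) against the same queue.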

One important note about scaling is that it’s often easy to “move the bottleneck.”

If you increase the number of HTTP API instances to handle more inbound HTTP requests, that means you’ll likely be adding more messages to the queue. So now you’ve moved the bottleneck to the workers.

So you add more workers to handle the increased number of messages in the queue. But now you’ve probably moved the bottleneck to the database, which means you may need to scale it up or out, depending on your use case. Just be aware of moving the bottleneck when scaling.
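A rough way to reason about this is that end-to-end throughput is capped by the slowest stage. The capacities below are made-up numbers in messages per second, and the model ignores real-world effects such as contention. It does show the bottleneck moving as each stage is scaled.

```python
def bottleneck(capacities: dict) -> tuple:
    """Return the stage with the lowest capacity, which caps overall throughput."""
    stage = min(capacities, key=capacities.get)
    return stage, capacities[stage]

# Hypothetical per-stage capacities (messages per second).
before = {"web": 200.0, "workers": 500.0, "database": 550.0}
print(bottleneck(before))  # ('web', 200.0)

# Triple the web tier: more messages land in the queue, so workers become the limit.
after_web = {**before, "web": 600.0}
print(bottleneck(after_web))  # ('workers', 500.0)

# Double the workers: now the database is the limit.
after_workers = {**after_web, "workers": 1000.0}
print(bottleneck(after_workers))  # ('database', 550.0)
```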

Codebase

Your HTTP API (Web) and Worker don’t have to be different codebases. They can share the same underlying codebase with separate entry points.

You might build two different executables composed of the same underlying code; the only difference is the entry point.

The HTTP API’s entry point is HTTP. The Worker’s entry point is pulling messages off the queue.

If you turn an HTTP request into the same type of request that your worker handles, all your code for handling the requests is the same regardless of the entry point. One way I like to think about it is turning an HTTP request into an application request. Turn the message off the queue into that same application request. This way, you’re agnostic of the entry point when you handle the request to perform the work.

This still allows you to scale each separately while simplifying the overall architecture of how you process a request.
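Here’s a minimal sketch of the idea, with hypothetical names. `PlaceOrder` is the application request and `handle_place_order` is the shared code. The two entry points only translate their input (an HTTP JSON body or a queue message) into that request. Real hosting, such as a web framework or a broker client, is left out.

```python
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class PlaceOrder:
    """Hypothetical application request: the entry-point-agnostic shape of the work."""
    order_id: str
    sku: str
    quantity: int

results: list = []

def to_request(data: dict) -> PlaceOrder:
    """Translate raw input from either entry point into the application request."""
    return PlaceOrder(data["orderId"], data["sku"], int(data["quantity"]))

def handle_place_order(request: PlaceOrder) -> str:
    """Shared application code; it doesn't know whether HTTP or the queue called it."""
    result = f"placed {request.quantity} x {request.sku} for {request.order_id}"
    results.append(result)
    return result

def http_entry_point(body: str) -> dict:
    """Web entry point: HTTP JSON body -> application request."""
    return {"status": 200, "body": handle_place_order(to_request(json.loads(body)))}

def queue_entry_point(message: bytes) -> None:
    """Worker entry point: queue message -> the same application request."""
    handle_place_order(to_request(json.loads(message)))

http_response = http_entry_point('{"orderId": "o1", "sku": "ABC", "quantity": 2}')
queue_entry_point(b'{"orderId": "o2", "sku": "XYZ", "quantity": 1}')
```

Both calls end up in `handle_place_order`. Only the thin translation layer differs, so each executable can be deployed and scaled on its own.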

Task Scheduler

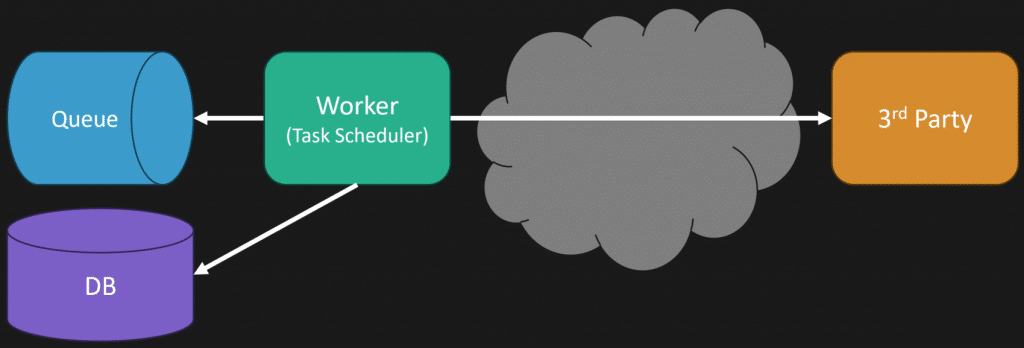

Another benefit of the web-queue-worker pattern is using your queue and worker as a task scheduler. Most of us have worked on a system that must perform scheduled or recurring jobs, such as batch jobs.

A common example is integrating with a third-party service to collect or push data on a nightly basis. A queue and a worker are a great way to accomplish this. Most queues have a delayed delivery mechanism that lets you enqueue a message that doesn’t become available to consumers until a specified delay has elapsed.
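Delayed delivery is usually a broker feature, but its mechanics can be sketched with a priority queue ordered by visibility time. In this hypothetical example, the worker handles a `NightlySync` message and then sends the next one with a one-day delay, which turns it into a recurring job. A fake clock is used so the example runs instantly. `DelayedQueue` and its methods are illustrative names, not any broker’s API.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional

@dataclass(order=True)
class Scheduled:
    visible_at: float
    seq: int                            # tie-breaker keeps FIFO order for equal times
    body: dict = field(compare=False)

class DelayedQueue:
    """Hypothetical in-memory queue with delayed delivery."""

    def __init__(self) -> None:
        self._heap: list = []
        self._seq = itertools.count()

    def send(self, body: dict, now: float, delay: float = 0.0) -> None:
        heapq.heappush(self._heap, Scheduled(now + delay, next(self._seq), body))

    def receive(self, now: float) -> Optional[dict]:
        """Return the next message whose delay has elapsed, or None."""
        if self._heap and self._heap[0].visible_at <= now:
            return heapq.heappop(self._heap).body
        return None

DAY = 24 * 60 * 60
runs: list = []

def handle(msg: dict, q: DelayedQueue, now: float) -> None:
    if msg["type"] == "NightlySync":
        runs.append(now)  # call the third-party service here
        q.send({"type": "NightlySync"}, now=now, delay=DAY)  # schedule the next run

q = DelayedQueue()
q.send({"type": "NightlySync"}, now=0.0, delay=DAY)

# Simulate the worker polling at a few points in time.
for now in [0.0, DAY - 1, DAY, DAY + 10, 2 * DAY]:
    msg = q.receive(now)
    if msg is not None:
        handle(msg, q, now)
```

The sync runs at `DAY` and `2 * DAY` and never before its message becomes visible. Re-sending from the handler is one way to make it recurring; some brokers and schedulers offer other mechanisms.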

Web-Queue-Worker

This isn’t about a monolith, microservices, or any architectural style of deployment. You can use the web-queue-worker pattern along with many other architectural patterns and styles. Web-queue-worker is about moving work that’s appropriate to be executed asynchronously.

Join CodeOpinion!

Developer-level members of my Patreon or YouTube channel get access to a private Discord server to chat with other developers about Software Architecture and Design and access to source code for any working demo application I post on my blog or YouTube. Check out my Patreon or YouTube Membership for more info.