
Caching ain’t easy! There are many factors that add to the complexity of caching. My general recommendation is to avoid caching if you can.

However, caching can bring the performance and scalability you might need. If you’re starting to use a cache in your system, here are some things to think about. Adding a cache isn’t trivial: it requires some thought about caching strategies, how to invalidate, and fallbacks to your database. Caching can improve performance and scalability, but it can also bring your entire system down if it fails.

YouTube

Check out my YouTube channel where I post all kinds of content that accompanies my posts including this video showing everything that is in this post.

Strategy

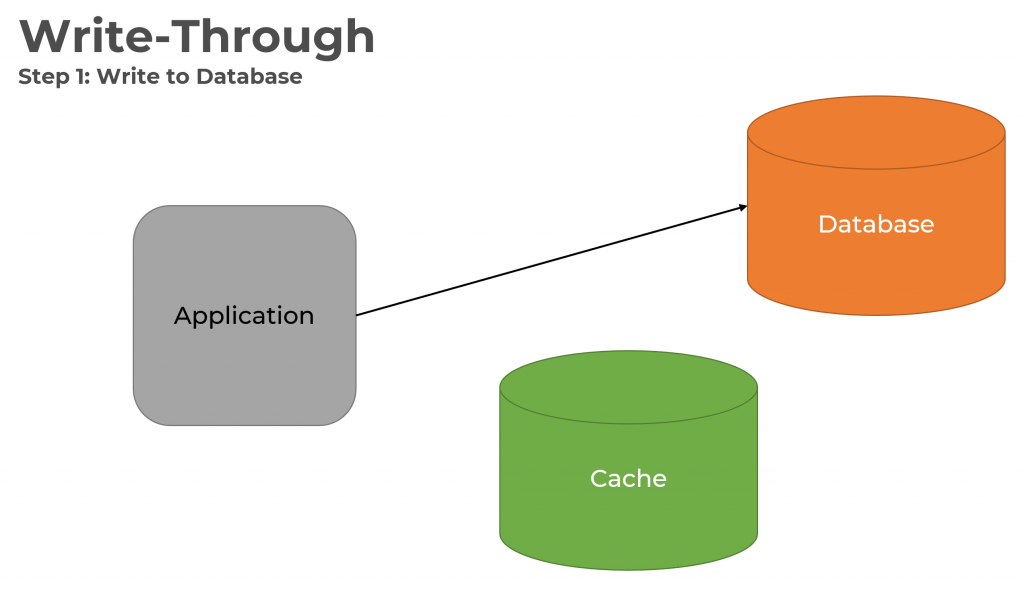

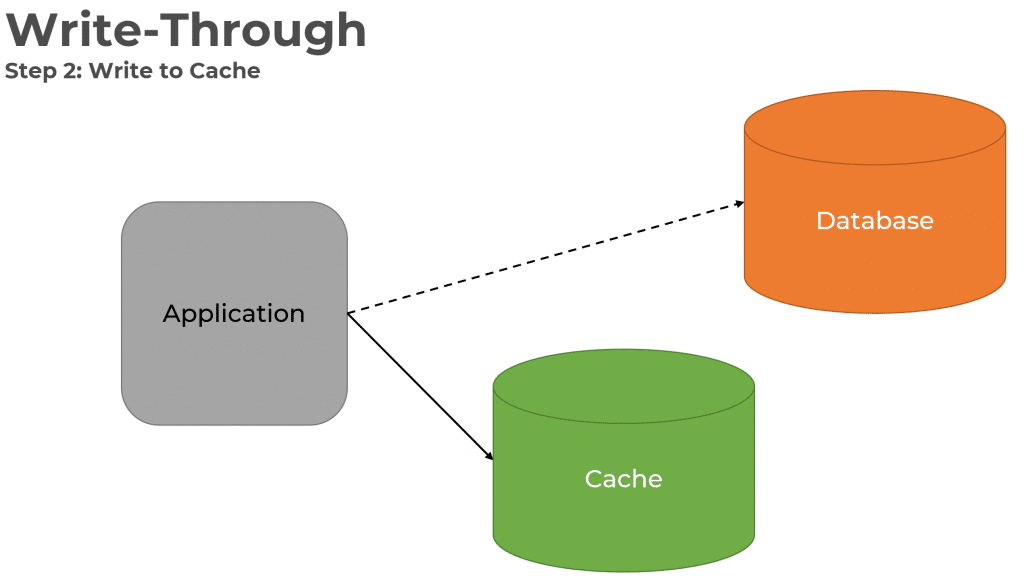

The first thing to think about is the caching strategy. The two most common methods that I’ve noticed in codebases are the write-through and cache-aside methods.

The write-through method is when your application writes to its primary database and then immediately updates the cached value. That means if you add a new record to your database, you immediately add the equivalent value to the cache. If you update a record, you immediately update the cached value.
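As a rough illustration, here is a minimal write-through sketch in Python. The `WriteThroughRepository` class is hypothetical, and plain dictionaries stand in for the primary database and the cache; a real system would use actual database and cache clients.

```python
class WriteThroughRepository:
    """Writes go to the primary database first, then straight to the cache."""

    def __init__(self, database: dict, cache: dict):
        self.database = database  # stand-in for the primary database
        self.cache = cache        # stand-in for the cache

    def save(self, key, value):
        self.database[key] = value  # 1. write to the primary database
        self.cache[key] = value     # 2. immediately update the cached value

    def get(self, key):
        # Reads are served from the cache, which save() keeps current.
        return self.cache.get(key)


database, cache = {}, {}
repo = WriteThroughRepository(database, cache)
repo.save("customer:1", {"name": "Ada"})           # new record: added to both
repo.save("customer:1", {"name": "Ada Lovelace"})  # update: both are updated
```

Because every write touches both stores, a read from the cache right after a write sees the new value. The sketch ignores what happens if the second write fails, which is one of the complexities a real implementation has to deal with.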

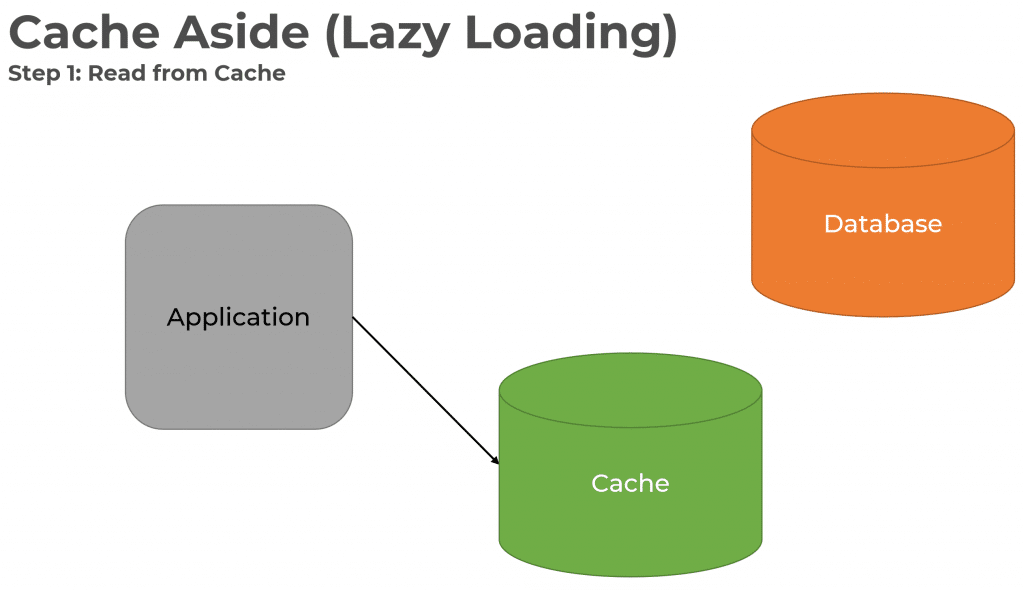

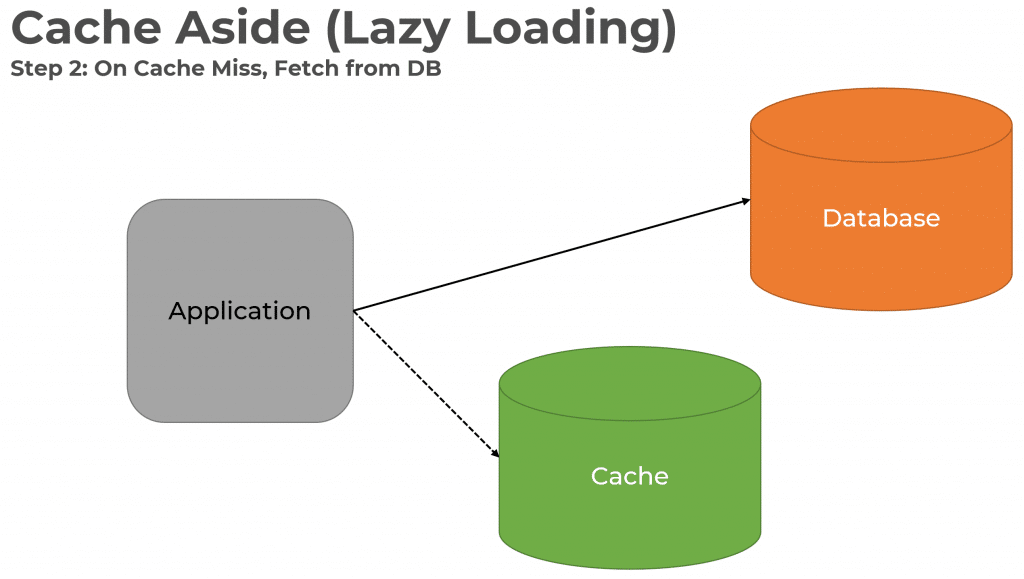

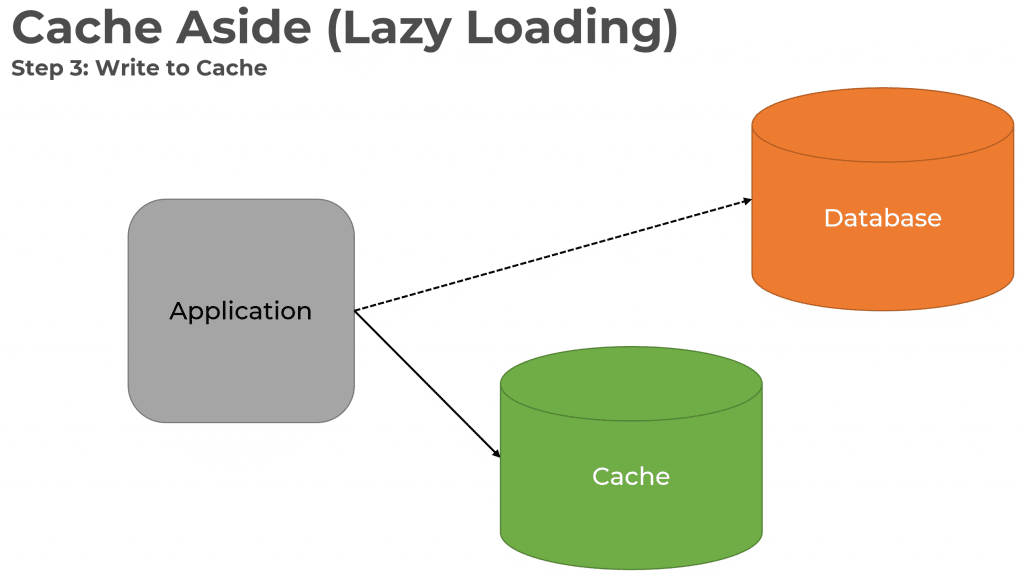

The second method most often used is the Cache Aside (Lazy Loading) method. This can be used in conjunction with Write-Through or by itself.

When the application needs something from cache, it first tries to retrieve it. If it does not exist (cache miss), it will then hit the primary database. Then it will write the value to the cache. Essentially you’re lazy loading the cache when data is requested for values that are not in the cache.
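The three steps can be sketched like this in Python. The `CacheAsideReader` class and its `database_reads` counter are made up for illustration, and dictionaries again stand in for the real database and cache.

```python
class CacheAsideReader:
    """Lazy loading: only populate the cache when a value is requested."""

    def __init__(self, database: dict, cache: dict):
        self.database = database  # stand-in for the primary database
        self.cache = cache        # stand-in for the cache
        self.database_reads = 0   # counts how often we had to hit the database

    def get(self, key):
        # 1. Try the cache first.
        if key in self.cache:
            return self.cache[key]
        # 2. Cache miss: hit the primary database.
        self.database_reads += 1
        value = self.database.get(key)
        # 3. Write the value to the cache for the next caller.
        if value is not None:
            self.cache[key] = value
        return value


reader = CacheAsideReader({"customer:1": {"name": "Ada"}}, {})
first = reader.get("customer:1")   # miss: loaded from the database, then cached
second = reader.get("customer:1")  # hit: served from the cache
```

In this sketch only the first call reaches the database; the second is served from the cache.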

Invalidating the Cache

There are only two hard things in Computer Science: cache invalidation and naming things. -Phil Karlton

https://www.karlton.org/2017/12/naming-things-hard/

If you’re not using the write-through method, then that means your cache is stale when data gets updated in your primary database.

There are a couple of methods I’ve used to invalidate the cache (remove the value from the cache) and let the cache aside (lazy loading) method do its job.

Cache Expiry (TTL)

Most caches can expire a cached value after a period of time (time to live). Once the cache has expired an item, the next call for it is a cache miss and has to go through the three steps of the lazy loading method (try the cache, read from the database, write the value back) to re-populate the cache.
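Real caches handle expiry for you, but a small sketch shows the idea. The `TtlCache` class below is hypothetical; it takes the clock as a parameter only so that expiry can be demonstrated without waiting.

```python
import time


class TtlCache:
    """Tiny in-memory cache where every entry expires after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be simulated
        self._items = {}     # key -> (value, expires_at)

    def set(self, key, value):
        self._items[key] = (value, self._clock() + self._ttl)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None  # never cached
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired: behaves like a cache miss
            return None
        return value


now = [0.0]  # fake clock we can move forward by hand
ttl_cache = TtlCache(ttl_seconds=30, clock=lambda: now[0])
ttl_cache.set("customer:1", "Ada")
now[0] = 29.0
before_expiry = ttl_cache.get("customer:1")  # still cached
now[0] = 30.0
after_expiry = ttl_cache.get("customer:1")   # expired, so a miss
```

The trade-off with a TTL is that the cached value can be stale for up to the length of the TTL after the database changes.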

Async Messaging

The second method is using asynchronous messaging to notify another process that data has changed and to invalidate the cache.

This requires you to already be using messaging (events) and to have a well-defined API through which data is mutated in your system. If any external system modifies data directly in your database, you will not be able to emit an event every time data is changed.

If you’re using something like Entity Framework, you could override SaveChangesAsync and look at the ChangeTracker to determine which entities have changed, then publish events for them.
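Independent of any particular ORM or broker, the idea can be sketched in Python. Everything here is hypothetical: `EventBus` is a tiny in-process stand-in for a real message broker, `ProductChanged` is a made-up event name, and handlers run synchronously, whereas a real broker would deliver the message asynchronously to another process.

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for a message broker (synchronous delivery)."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        for handler in self._handlers[event_type]:
            handler(payload)


database = {"product:1": {"price": 10}}
cache = {"product:1": {"price": 10}}  # currently in sync with the database
bus = EventBus()


def invalidate_product(event):
    # Remove the cached value; the next read lazy loads it from the database.
    cache.pop(event["key"], None)


bus.subscribe("ProductChanged", invalidate_product)


def change_price(key, price):
    # The single, well-defined place where this data is mutated.
    database[key] = {"price": price}
    bus.publish("ProductChanged", {"key": key})


change_price("product:1", 12)
```

After `change_price` runs, the stale entry is gone from the cache, so the next read falls through to the database. Any write that bypasses `change_price` would not publish an event, which is exactly the limitation with external systems described above.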

Failures

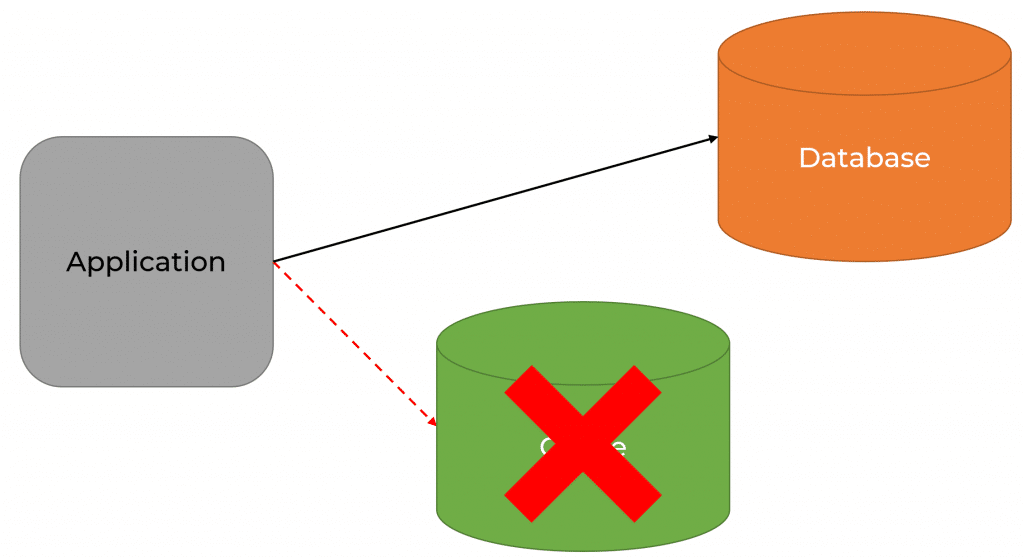

One benefit of the Cache Aside (Lazy Loading) method is that if, for whatever reason, you cannot reach the cache, you can fall back to using your database. This works exactly like a cache miss. You need to handle the appropriate exceptions and timeouts from the cache client to determine that the cache is unavailable, and then go directly to the database and return the value.
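A minimal sketch of that fallback in Python might look like the following. `CacheUnavailable` and `FlakyCache` are invented for the example; with a real cache client you would catch whatever connection and timeout exceptions that client actually raises.

```python
class CacheUnavailable(Exception):
    """Stand-in for the connection/timeout errors a cache client can raise."""


class FlakyCache:
    """Fake cache that can be switched off to simulate an outage."""

    def __init__(self):
        self.available = True
        self._items = {}

    def get(self, key):
        if not self.available:
            raise CacheUnavailable("cache is unreachable")
        return self._items.get(key)

    def set(self, key, value):
        if not self.available:
            raise CacheUnavailable("cache is unreachable")
        self._items[key] = value


def get_with_fallback(key, cache, database):
    try:
        cached = cache.get(key)
    except CacheUnavailable:
        # Cache is down: treat it like a miss and go straight to the database.
        return database.get(key)
    if cached is not None:
        return cached
    value = database.get(key)
    if value is not None:
        try:
            cache.set(key, value)
        except CacheUnavailable:
            pass  # could not re-populate the cache; still return the value
    return value


flaky_cache = FlakyCache()
orders = {"order:7": "shipped"}
while_up = get_with_fallback("order:7", flaky_cache, orders)    # miss, then cached
flaky_cache.available = False
while_down = get_with_fallback("order:7", flaky_cache, orders)  # served by database
```

The caller gets the same answer either way; the difference is that during the outage every request lands on the database.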

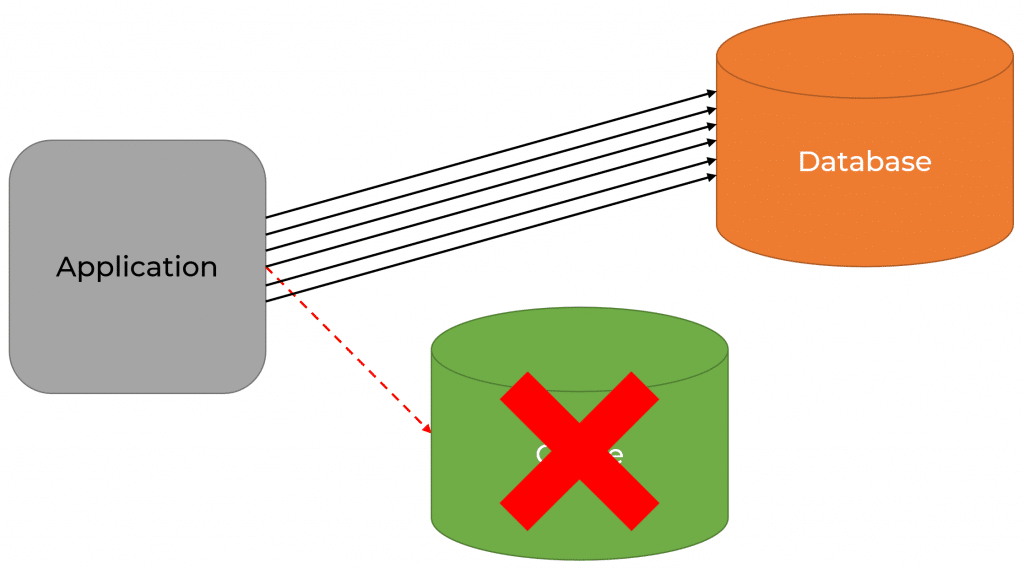

The one thing to be very aware of is that if your cache is unavailable, all requests are now going to be fulfilled by the database. This could have a significant performance impact on your primary database, because every request that would normally be handled by your cache is now extra load on the database. For example, if the cache normally serves 90% of reads, the database goes from handling one in ten reads to handling all of them, a tenfold increase.

Complexity of Caching

The complexity of caching isn’t trivial.

Avoid caching if you can.

First, look at the queries to your primary database before going down the path of adding a cache. There are many more complexities that you introduce when adding a cache. Avoid it if you can.